Non-parametric tests

Klinkenberg

University of Amsterdam

7 oct 2022

Nonparametric tests

Parametric vs Nonparametric

| Attribute | Parametric | Nonparametric |
|---|---|---|
| distribution | normally distributed | any distribution |
| sampling | random sample | random sample |
| sensitivity to outliers | yes | no |
| works with | large data sets | small and large data sets |
| speed | fast | slow |

Ranking

| rx | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| ry | 1 | 2 | 3 | 4 | 5 | 6 |

Ties

| x | 11 | 42 | 62 | 73 | 84 | 84 | 42 | 73 | 90 |
|---|---|---|---|---|---|---|---|---|---|
| order | 11 | 42 | 42 | 62 | 73 | 73 | 84 | 84 | 90 |
| ranks | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
| ties | 1 | 2.5 | 2.5 | 4 | 5.5 | 5.5 | 7.5 | 7.5 | 9 |

\[\frac{2 + 3}{2} = 2.5, \frac{5 + 6}{2} = 5.5, \frac{7 + 8}{2} = 7.5\]
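In R, the base function `rank()` gives tied values the mean of the ranks they occupy (`ties.method = "average"`, which is also its default). A short sketch that reproduces the rows of the ties table; the variable names are illustrative:

```r
# Scores from the ties table (the unsorted "x" row)
x <- c(11, 42, 62, 73, 84, 84, 42, 73, 90)

# Sorted scores: the "order" row
x_sorted <- sort(x)

# Plain positions 1..n in the sorted data: the "ranks" row
positions <- seq_along(x_sorted)

# Tied values share the mean of their positions: the "ties" row
tied_ranks <- rank(x_sorted, ties.method = "average")

print(rbind(order = x_sorted, ranks = positions, ties = tied_ranks))

# Ranking the unsorted scores directly gives each value its averaged rank
print(rank(x))
```

In practice there is no need to sort first: `rank(x)` returns the averaged rank of every value in its original position.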

Procedure

- Assumption: independent random samples.
- Hypotheses:
  - \(H_0\): equal population distributions (implies equal mean ranks)
  - \(H_A\): unequal mean ranks (two sided)
  - \(H_A\): higher mean rank for one group (one sided)
- Test statistic: based on the mean ranks or the rank sums of the groups.
- Standardise the test statistic.
- Calculate the p-value, one or two sided.
- Reject \(H_0\) if \(p < \alpha\).

Wilcoxon rank-sum test

Independent 2 samples


Developed by Frank Wilcoxon, the rank-sum test is a nonparametric alternative to the independent samples t-test.

After ranking all values of \(x\) together and summing the ranks per group, one would expect, under the null hypothesis, equal mean ranks for both groups (and therefore equal rank sums when the groups are the same size).

After standardising the rank sum, it can be tested against the standard normal distribution.

Sum the ranks

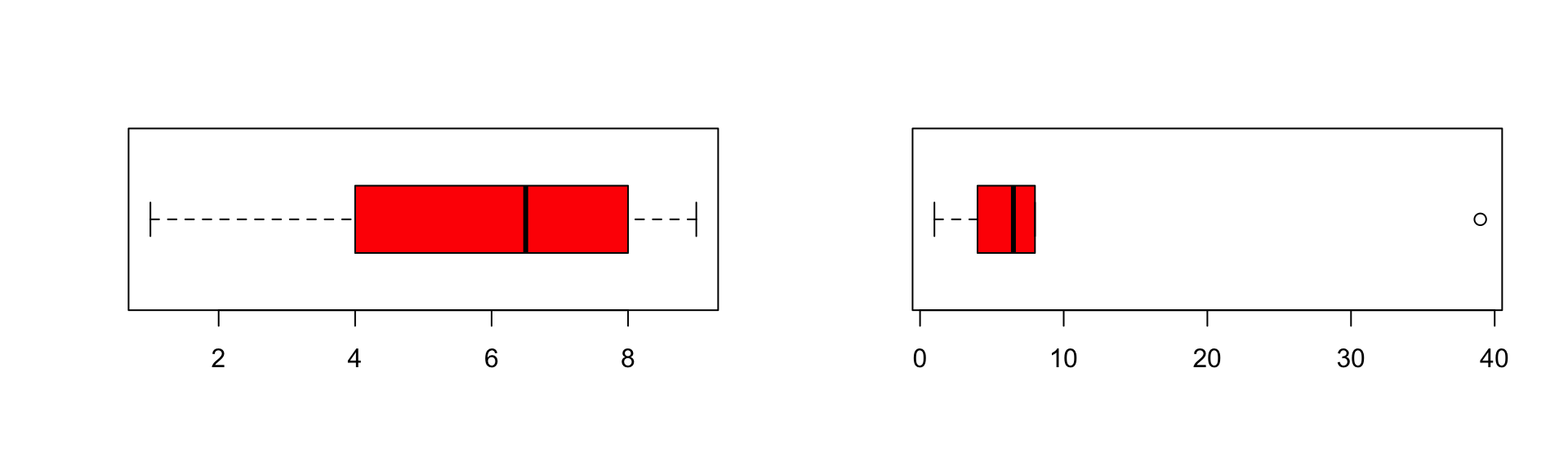

Simulate data

Example

Calculate the sum of ranks per group

\(W\) is the lower of the two rank sums:

\[W = \min\left(\sum R_1, \sum R_2\right)\]
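The steps above (rank all scores together, sum the ranks per group, take the lower sum) can be sketched with base R. The scores and group labels below are hypothetical, made up for illustration rather than taken from the simulated data on the slides:

```r
# Hypothetical scores for two independent groups (made up for illustration)
scores <- c(28, 26, 35, 24, 18,   # group A
            16, 15, 19, 22, 20)   # group B
group  <- rep(c("A", "B"), each = 5)

# Rank all scores together; tied scores would get the average rank
ranks <- rank(scores)

# Sum of the ranks per group
rank_sums <- sapply(split(ranks, group), sum)
print(rank_sums)

# W is the lower of the two rank sums
W <- min(rank_sums)
print(W)
```

As a sanity check, the two rank sums always add up to \(N(N+1)/2\), here \(10 \cdot 11 / 2 = 55\).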

Standardise W

To calculate the z-score we need to standardise \(W\). To do so we need the mean and the standard error of \(W\) under \(H_0\).

For this we need the sample size of each group, where \(n_1\) is the size of the group whose rank sum is \(W\).

Mean W

\[\bar{W}=\frac{n_1(n_1+n_2+1)}{2}\]

SE W

\[{SE}_{W}=\sqrt{ \frac{n_1 n_2 (n_1+n_2+1)}{12} }\]

Calculate Z

\[z = \frac{W - \bar{W}}{{SE}_W}\]

This looks a lot like other standardised statistics:

\[\frac{X - \bar{X}}{{SE}_X} \quad \text{or} \quad \frac{b - \mu_{b}}{{SE}_b}\]
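A minimal sketch of the standardisation in base R, using the mean and standard error formulas for \(W\) without any correction for ties. The scores are hypothetical, made up for illustration:

```r
# Hypothetical scores for two independent groups (made up for illustration)
scores <- c(28, 26, 35, 24, 18, 16, 15, 19, 22, 20)
group  <- rep(c("A", "B"), each = 5)

rank_sums <- sapply(split(rank(scores), group), sum)
W <- min(rank_sums)

# n1: size of the group whose rank sum is W; n2: size of the other group
n1 <- sum(group == names(which.min(rank_sums)))
n2 <- length(scores) - n1

# Mean and standard error of W under H0 (no tie correction)
W_mean <- n1 * (n1 + n2 + 1) / 2
W_se   <- sqrt(n1 * n2 * (n1 + n2 + 1) / 12)

z <- (W - W_mean) / W_se
print(c(W = W, W_mean = W_mean, W_se = W_se, z = z))
```

Because \(W\) is the lower rank sum, \(z\) is zero or negative here.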

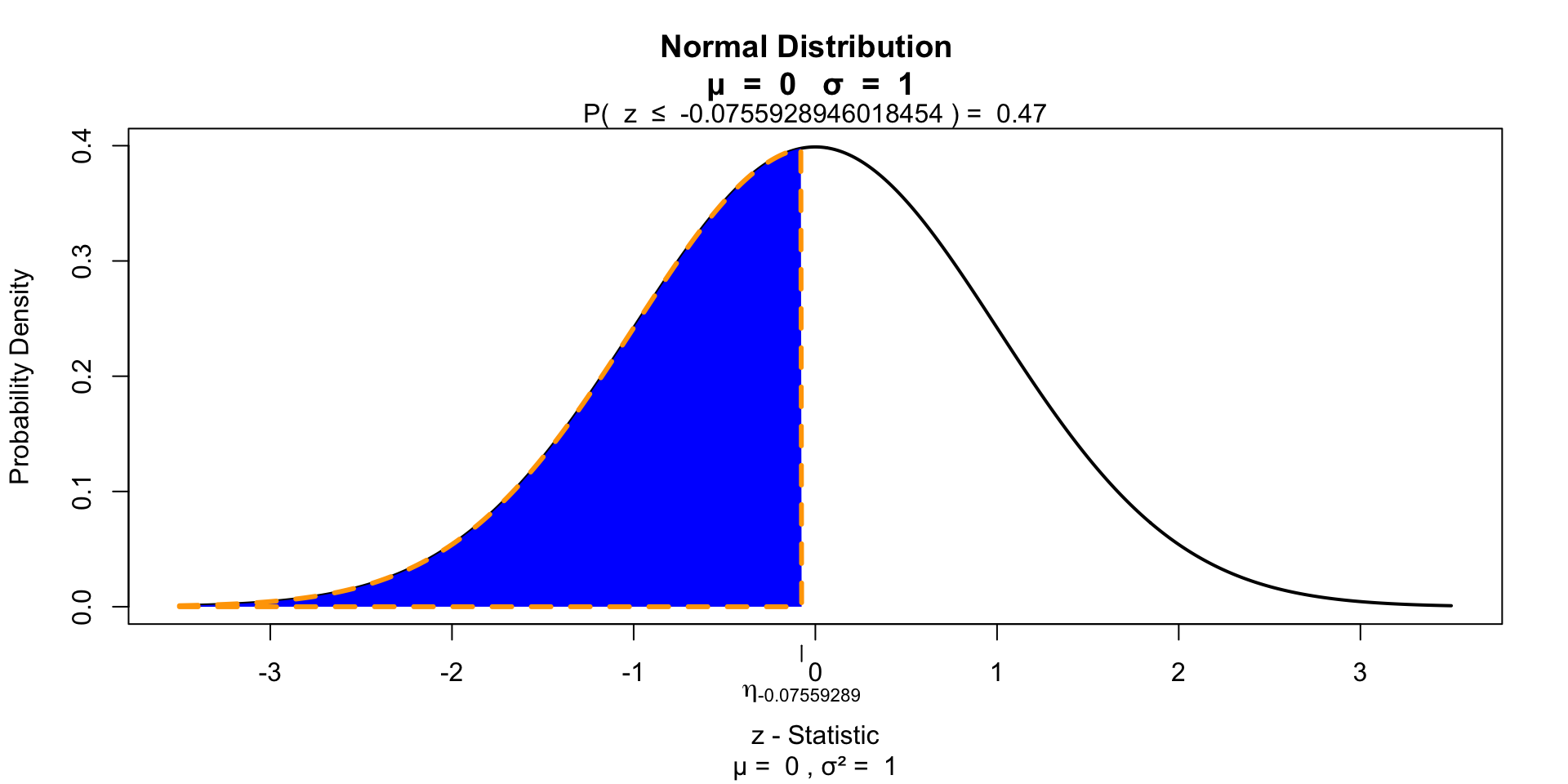

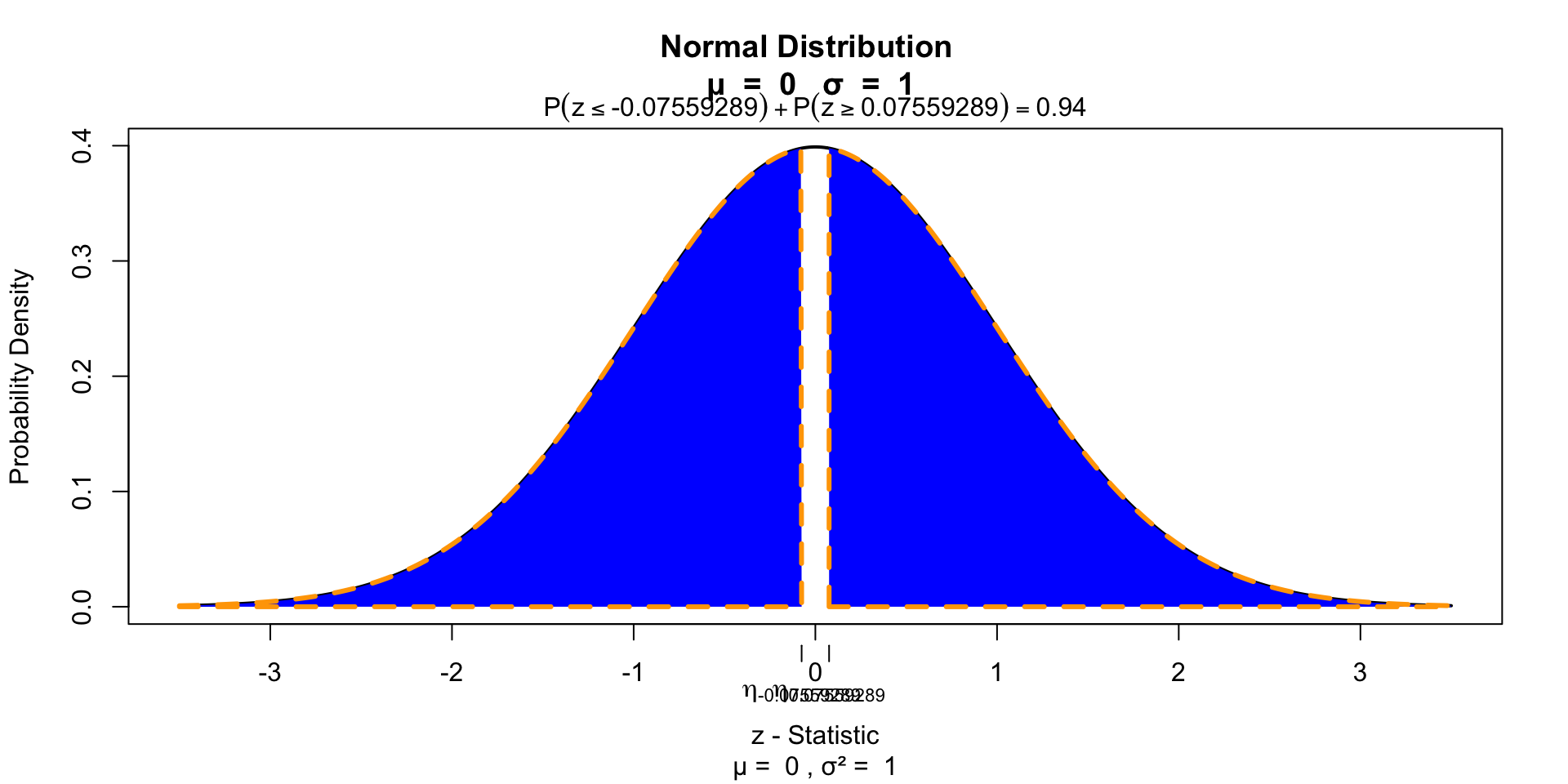

Test for significance 1 sided

Test for significance 2 sided
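A sketch of both p-values using the standard normal distribution (`pnorm()`), cross-checked against the normal approximation of base R's `wilcox.test()` with `exact = FALSE` and `correct = FALSE` (no continuity correction). The data are hypothetical, and the one-sided p-value assumes the observed difference lies in the direction predicted beforehand:

```r
# Hypothetical data (made up for illustration)
a <- c(28, 26, 35, 24, 18)
b <- c(16, 15, 19, 22, 20)
n1 <- length(a); n2 <- length(b)   # equal sizes, so either group can play n1

ranks <- rank(c(a, b))
W <- min(sum(ranks[1:n1]), sum(ranks[-(1:n1)]))
z <- (W - n1 * (n1 + n2 + 1) / 2) / sqrt(n1 * n2 * (n1 + n2 + 1) / 12)

# W is the lower rank sum, so z <= 0: the observed effect sits in the left tail.
# One-sided p-value (assumes the effect is in the predicted direction)
p_one_sided <- pnorm(z)
# Two-sided p-value: area in both tails beyond |z|
p_two_sided <- 2 * pnorm(-abs(z))

# Cross-check with base R's normal approximation
wt <- wilcox.test(a, b, exact = FALSE, correct = FALSE)
print(c(one_sided = p_one_sided, two_sided = p_two_sided,
        wilcox_two_sided = wt$p.value))
```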

Effect size

\[r = \frac{z}{\sqrt{N}}\]
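As an arithmetic sketch with hypothetical values (\(W = 18\), \(n_1 = n_2 = 5\), not taken from the slides), taking \(N\) to be the total number of observations \(n_1 + n_2\):

```r
# Hypothetical result (made up for illustration): W = 18 with n1 = n2 = 5
W <- 18; n1 <- 5; n2 <- 5
z <- (W - n1 * (n1 + n2 + 1) / 2) / sqrt(n1 * n2 * (n1 + n2 + 1) / 12)

# N: total number of observations in both groups
N <- n1 + n2
r <- z / sqrt(N)
print(r)
```

The sign of \(r\) only reflects which rank sum was taken as \(W\); its magnitude is what is usually reported.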

Mann–Whitney test

\[U = n_1 n_2 + \frac{n_1 (n_1 + 1)}{2} - R_1\]

where \(R_1\) is the sum of the ranks in group 1.

\(\bar{U}\) and \({SE}_U\) when there are no tied ranks:

\[\bar{U} = \frac{n_1 n_2}{2}\]

\[{SE}_U = \sqrt{\frac{n_1 n_2 (n_1 + n_2 + 1)}{12}}\]
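A minimal sketch of \(U\) and its z-score in base R, on hypothetical scores made up for illustration. Note that base R's `wilcox.test()` reports its statistic (labelled `W`) as \(R_1 - n_1(n_1+1)/2\), the complement of the \(U\) defined above, so the two add up to \(n_1 n_2\):

```r
# Hypothetical scores for two independent groups (made up for illustration)
g1 <- c(28, 26, 35, 24, 18)
g2 <- c(16, 15, 19, 22, 20)
n1 <- length(g1); n2 <- length(g2)

# R1: sum of the ranks of group 1 within the combined sample
R1 <- sum(rank(c(g1, g2))[seq_len(n1)])

# U as defined above
U <- n1 * n2 + n1 * (n1 + 1) / 2 - R1

# Mean and SE of U, valid when there are no ties
U_mean <- n1 * n2 / 2
U_se   <- sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
z <- (U - U_mean) / U_se

# Base R's statistic is the complementary count R1 - n1(n1 + 1)/2
wt <- wilcox.test(g1, g2)
print(c(U = U, base_R_statistic = unname(wt$statistic), z = z))
```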

Wilcoxon signed-rank test

Paired 2 samples


The Wilcoxon signed-rank test is a nonparametric alternative to the paired samples t-test. It ranks the absolute differences between two repeated measures and gives each rank the sign (+ or -) of its difference. Summing the ranks of the positive differences tests the null hypothesis that the positive and negative rank sums are equal.

Simulate data

```r
n = 20

factor = rep(c("Ecstasy","Alcohol"), each = n/2)

# Dummy code: 0 for Ecstasy, 1 for Alcohol
dummy = ifelse(factor == "Ecstasy", 0, 1)

b.0 = 23
b.1 = 5
error = rnorm(n, 0, 1.7)

# Model
depres = b.0 + b.1*dummy + error
depres = round(depres)

data <- data.frame(factor, depres)

# Put the two sets of scores side by side, treated as paired measures
Ecstasy <- subset(data, factor=="Ecstasy")$depres
Alcohol <- subset(data, factor=="Alcohol")$depres

data <- data.frame(Ecstasy, Alcohol)
```

Example

Calculate T

```r
# Calculate difference in scores between first and second measure
data$difference = data$Ecstasy - data$Alcohol

# Calculate absolute difference in scores between first and second measure
data$abs.difference = abs(data$difference)

# Create rank variable with place holder NA
data$rank <- NA

# Rank only the non-zero differences (zero differences keep NA)
nonzero <- which(data$difference != 0)
data[nonzero, 'rank'] <- rank(data[nonzero, 'abs.difference'])

# Assign a '+' (1) or a '-' (-1) to each difference (0 for no difference)
data$sign = sign(data$difference)

# Split the ranks into a positive and a negative column
data$rank_pos = with(data, ifelse(sign == 1, rank, 0))
data$rank_neg = with(data, ifelse(sign == -1, rank, 0))
```

The data

Calculate \(T_+\)
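A compact sketch of the same steps on a small, hypothetical set of paired scores (made up for illustration, including two zero differences and some tied absolute differences): drop the zero differences, rank the absolute differences, and sum the ranks of the positive ones:

```r
# Hypothetical paired scores (same subjects measured twice), for illustration
ecstasy <- c(24, 28, 25, 30, 27, 26, 29, 23, 25, 28)
alcohol <- c(22, 29, 25, 26, 24, 26, 25, 24, 21, 27)

difference <- ecstasy - alcohol

# Drop zero differences; they carry no sign
nonzero <- difference[difference != 0]

# Rank the absolute differences (ties get the average rank)
ranks <- rank(abs(nonzero))

# T+ is the sum of the ranks of the positive differences
T_pos <- sum(ranks[nonzero > 0])
T_neg <- sum(ranks[nonzero < 0])
print(c(T_pos = T_pos, T_neg = T_neg))
```

With \(n\) non-zero differences, \(T_+ + T_-\) always equals \(n(n+1)/2\), here \(8 \cdot 9 / 2 = 36\).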

Calculate \(\bar{T}\) and \({SE}_{T}\)

\[\bar{T} = \frac{n(n+1)}{4}\]

\[{SE}_{T} = \sqrt{\frac{n(n+1)(2n+1)}{24}}\]

Here \(n\) is the number of pairs with a non-zero difference.

Calculate Z

\[z = \frac{T_+ - \bar{T}}{{SE}_T}\]
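A minimal sketch of these formulas in base R, without any correction for ties. The paired scores are hypothetical, chosen without zero or tied differences so the plain formulas apply:

```r
# Hypothetical paired scores (made up for illustration)
before <- c(30, 28, 35, 24, 31, 29, 33, 26)
after  <- c(25, 29, 27, 21, 24, 31, 22, 20)

d <- before - after
d <- d[d != 0]       # keep only non-zero differences
n <- length(d)       # number of non-zero differences

ranks <- rank(abs(d))
T_pos <- sum(ranks[d > 0])

# Mean and SE of T under H0 (no tie correction)
T_mean <- n * (n + 1) / 4
T_se   <- sqrt(n * (n + 1) * (2 * n + 1) / 24)

z <- (T_pos - T_mean) / T_se
print(c(T_pos = T_pos, T_mean = T_mean, T_se = T_se, z = z))
```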

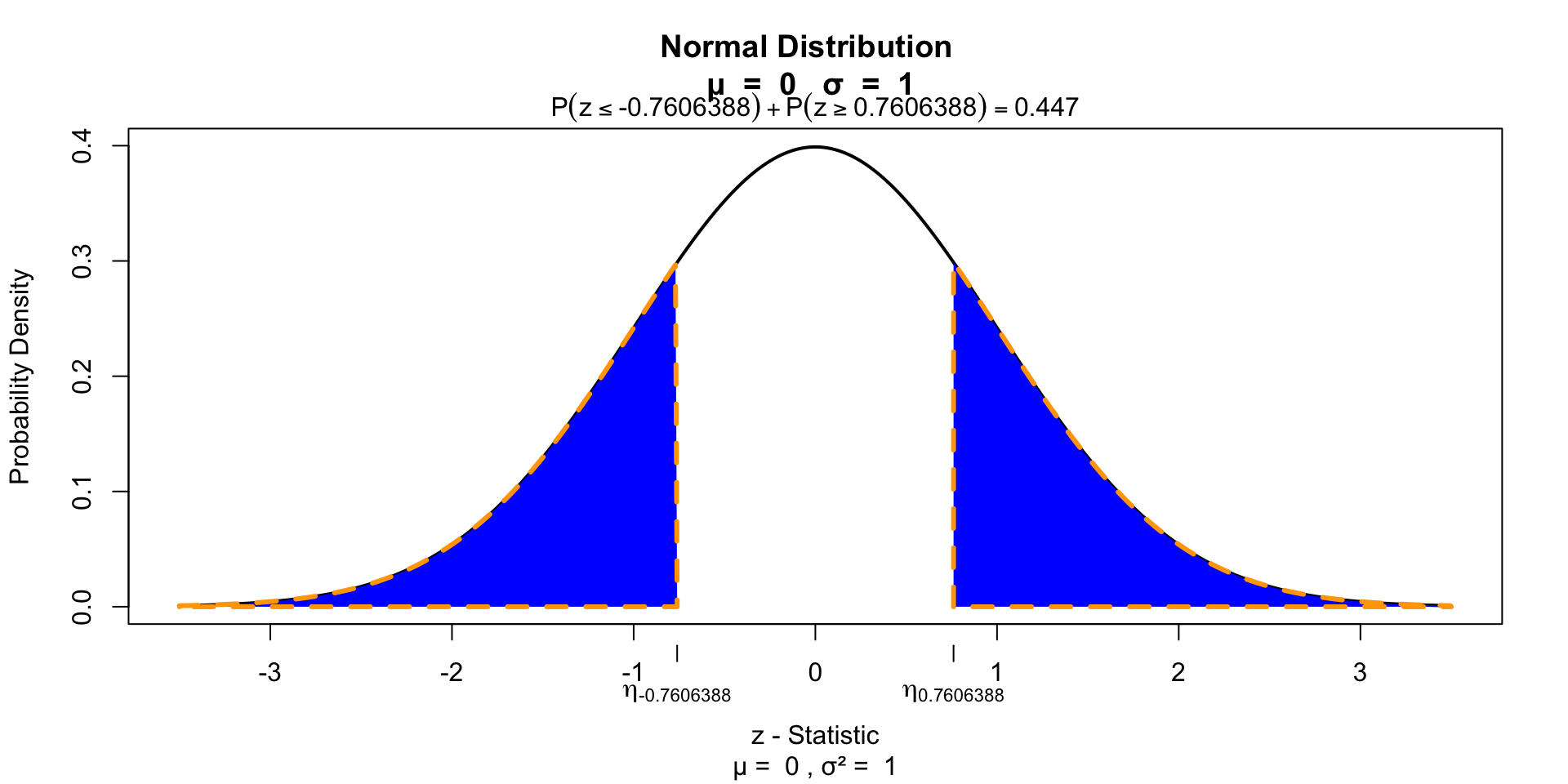

Test for significance
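A sketch of the p-values for \(T_+\), cross-checked against base R's `wilcox.test()` with `paired = TRUE`, `exact = FALSE` and `correct = FALSE` (normal approximation without continuity correction). The data are hypothetical and contain no zero or tied differences; the one-sided p-value assumes \(H_A\) predicted positive differences (`before` > `after`):

```r
# Hypothetical paired scores (made up for illustration)
before <- c(30, 28, 35, 24, 31, 29, 33, 26)
after  <- c(25, 29, 27, 21, 24, 31, 22, 20)

d <- before - after               # this data has no zero differences
n <- length(d)
ranks <- rank(abs(d))
T_pos <- sum(ranks[d > 0])
z <- (T_pos - n * (n + 1) / 4) / sqrt(n * (n + 1) * (2 * n + 1) / 24)

# Two-sided: both tails; one-sided: upper tail only
p_two_sided <- 2 * pnorm(-abs(z))
p_one_sided <- pnorm(z, lower.tail = FALSE)

# Cross-check: base R reports T+ as its statistic V
wt <- wilcox.test(before, after, paired = TRUE, exact = FALSE, correct = FALSE)
print(c(two_sided = p_two_sided, one_sided = p_one_sided,
        wilcox_two_sided = wt$p.value))
```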

Effect size

\[r = \frac{z}{\sqrt{N}}\]

Here \(N\) is the number of observations.

Kruskal–Wallis test

Independent >2 samples


Created by William Henry Kruskal (left) and Wilson Allen Wallis (right), the Kruskal–Wallis test is a nonparametric alternative to the independent one-way ANOVA.

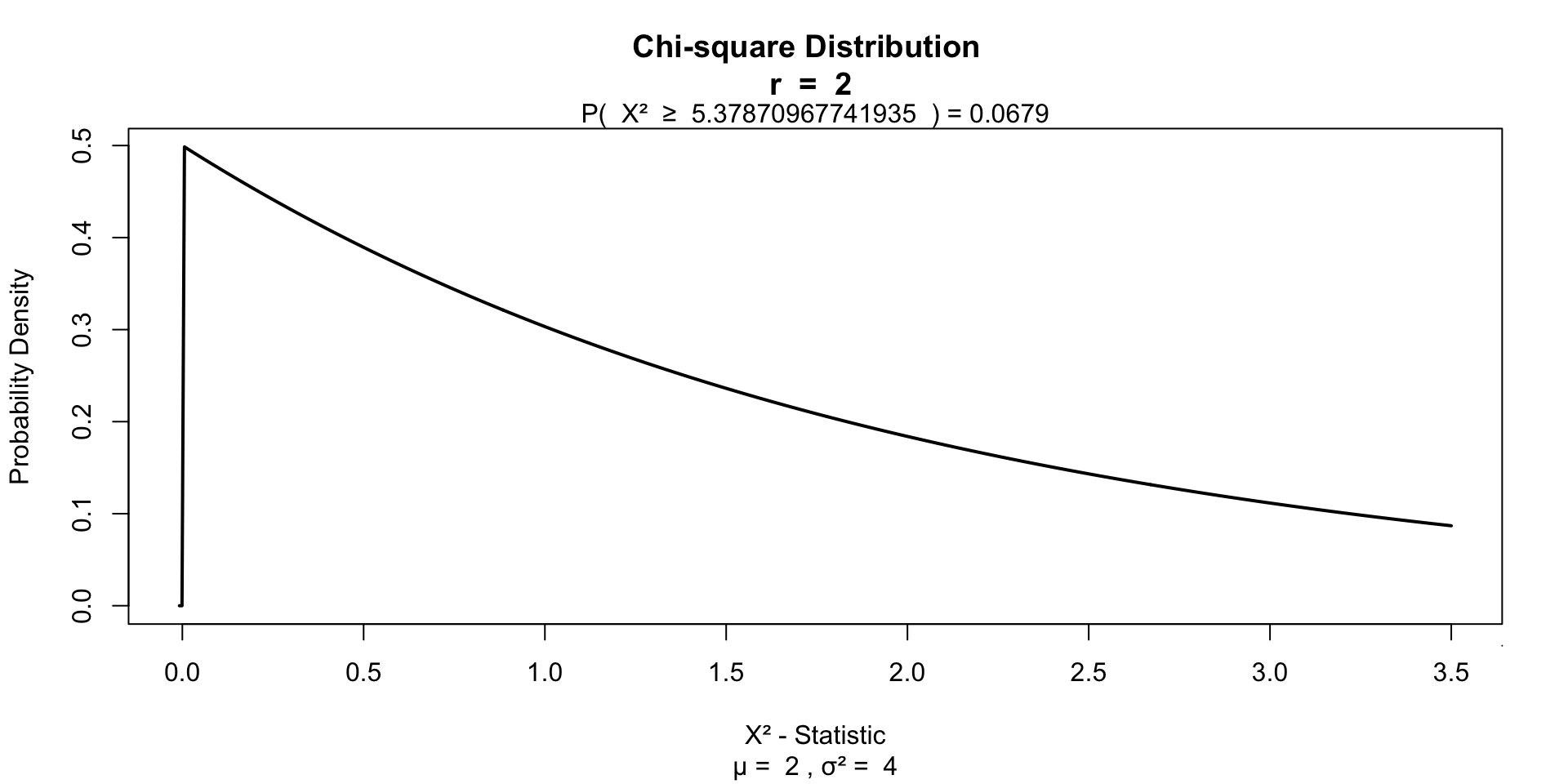

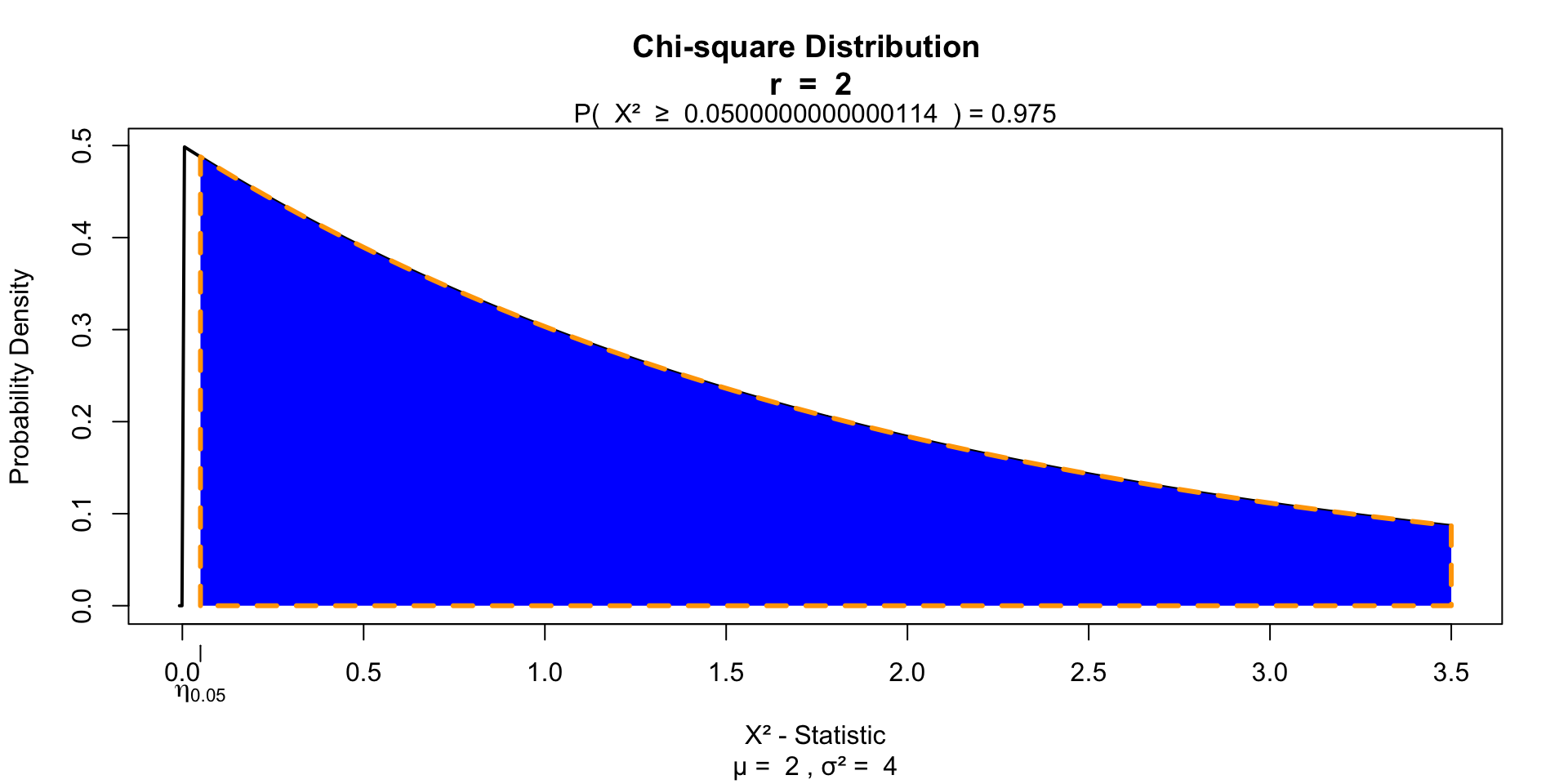

The Kruskal–Wallis test essentially compares the observed mean rank of each group with the mean rank expected under \(H_0\); the resulting statistic \(H\) is approximately \(\chi^2\) distributed.

Simulate data

```r
n = 30

factor = rep(c("ecstasy","alcohol","control"), each = n/3)

# Dummy coding with control as the reference group
dummy.1 = ifelse(factor == "alcohol", 1, 0)
dummy.2 = ifelse(factor == "ecstasy", 1, 0)

# No group effects: H0 is true in this simulation
b.0 = 23
b.1 = 0
b.2 = 0

error = rnorm(n, 0, 1.7)

# Model
depres = b.0 + b.1*dummy.1 + b.2*dummy.2 + error
depres = round(depres)

data <- data.frame(factor, depres)
```

Assign ranks

The data

Calculate H

\[H = \frac{12}{N(N+1)} \sum_{i=1}^k \frac{R_i^2}{n_i} - 3(N+1)\]

- \(N\) total sample size

- \(n_i\) sample size per group

- \(k\) number of groups

- \(R_i\) rank sums per group
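A minimal sketch of \(H\) in base R, following the formula and definitions above (no correction for ties). The scores are hypothetical, made up for illustration, with three groups of four and no tied values:

```r
# Hypothetical scores for three independent groups (made up for illustration)
depres <- c(12, 15, 18, 21,   # ecstasy
            10, 14, 16, 19,   # alcohol
             8, 11, 13, 17)   # control
group  <- rep(c("ecstasy", "alcohol", "control"), each = 4)

ranks <- rank(depres)                 # rank across all groups together
R_i   <- tapply(ranks, group, sum)    # rank sum per group
n_i   <- tapply(ranks, group, length) # sample size per group
N     <- length(depres)               # total sample size

H <- 12 / (N * (N + 1)) * sum(R_i^2 / n_i) - 3 * (N + 1)
print(H)
```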


The degrees of freedom are \(df = k - 1\).

Test for significance
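A sketch of the significance test: compare \(H\) with a \(\chi^2\) distribution on \(k - 1\) degrees of freedom using `pchisq()`, and cross-check with base R's `kruskal.test()`. The data are hypothetical, made up for illustration; with tied scores `kruskal.test()` applies a tie correction, so the two would then differ slightly:

```r
# Hypothetical scores for three independent groups (made up for illustration)
depres <- c(12, 15, 18, 21,  10, 14, 16, 19,  8, 11, 13, 17)
group  <- factor(rep(c("ecstasy", "alcohol", "control"), each = 4))

ranks <- rank(depres)
N <- length(depres)
k <- nlevels(group)
H <- 12 / (N * (N + 1)) *
  sum(tapply(ranks, group, sum)^2 / tapply(ranks, group, length)) - 3 * (N + 1)

# Upper-tail p-value from the chi-squared distribution with k - 1 df
df <- k - 1
p  <- pchisq(H, df = df, lower.tail = FALSE)

kt <- kruskal.test(depres ~ group)
print(c(H = H, df = df, p = p, kruskal_p = kt$p.value))
```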

Friedman’s ANOVA

Paired >2 samples


Created by Milton Friedman, Friedman’s ANOVA is a nonparametric alternative to the one-way repeated measures ANOVA.

Just like the Kruskal–Wallis test, Friedman’s ANOVA compares the observed mean ranks with the mean ranks expected under \(H_0\); the resulting statistic \(F_r\) is also approximately \(\chi^2\) distributed.

Simulate data

```r
n = 30

factor = rep(c("ecstasy","alcohol","control"), each = n/3)

# Dummy coding with control as the reference group
dummy.1 = ifelse(factor == "alcohol", 1, 0)
dummy.2 = ifelse(factor == "ecstasy", 1, 0)

# No condition effects: H0 is true in this simulation
b.0 = 23
b.1 = 0
b.2 = 0

error = rnorm(n, 0, 1.7)

# Model
depres = b.0 + b.1*dummy.1 + b.2*dummy.2 + error
depres = round(depres)

data <- data.frame(factor, depres)
```

The data

Assign ranks

Rank the scores within each row (subject).

The data with ranks

Calculate \(F_r\)

\[F_r = \left[ \frac{12}{Nk(k+1)} \sum_{i=1}^k R_i^2 \right] - 3N(k+1)\]

- \(N\) total number of subjects

- \(k\) number of groups

- \(R_i\) rank sums for each group
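A minimal sketch of \(F_r\) in base R, following the formula and definitions above. The scores are hypothetical (five subjects, three conditions, no ties within a row), laid out with one row per subject so that each row can be ranked separately:

```r
# Hypothetical scores: one row per subject, one column per condition
scores <- matrix(c(20, 18, 15,
                   22, 23, 19,
                   25, 21, 20,
                   19, 17, 18,
                   24, 20, 21),
                 ncol = 3, byrow = TRUE,
                 dimnames = list(NULL, c("ecstasy", "alcohol", "control")))

# Rank within each row; apply() puts conditions in rows, so transpose back
ranks <- t(apply(scores, 1, rank))

R_i <- colSums(ranks)   # rank sum per condition
N   <- nrow(scores)     # number of subjects
k   <- ncol(scores)     # number of conditions

F_r <- 12 / (N * k * (k + 1)) * sum(R_i^2) - 3 * N * (k + 1)
print(F_r)
```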

Calculate \(F_r\)

Calculate the rank sum per condition and \(N\).


The degrees of freedom are \(df = k - 1\).

Test for significance
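A sketch of the significance test: compare \(F_r\) with a \(\chi^2\) distribution on \(k - 1\) degrees of freedom using `pchisq()`, and cross-check with base R's `friedman.test()`, which takes a matrix with subjects in rows and conditions in columns. The scores are hypothetical, made up for illustration:

```r
# Hypothetical scores: one row per subject, one column per condition
scores <- matrix(c(20, 18, 15,
                   22, 23, 19,
                   25, 21, 20,
                   19, 17, 18,
                   24, 20, 21),
                 ncol = 3, byrow = TRUE,
                 dimnames = list(NULL, c("ecstasy", "alcohol", "control")))

ranks <- t(apply(scores, 1, rank))
N <- nrow(scores); k <- ncol(scores)
F_r <- 12 / (N * k * (k + 1)) * sum(colSums(ranks)^2) - 3 * N * (k + 1)

# Upper-tail p-value from the chi-squared distribution with k - 1 df
df <- k - 1
p  <- pchisq(F_r, df = df, lower.tail = FALSE)

ft <- friedman.test(scores)
print(c(F_r = F_r, df = df, p = p, friedman_p = ft$p.value))
```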

End


Scientific & Statistical Reasoning